When search engines like Google visit your website, they do not check every page at once. They use a limited amount of time and resources, called a crawl budget, to decide how many pages to visit and how often to come back. In simple words, crawl budget is how many pages search engines are willing to crawl on your site in a given period.

Crawl budget matters for SEO because it affects how quickly and how often your pages are discovered and updated in search results. If search engines spend their crawl budget on unimportant, duplicate, or broken pages, they may ignore or delay crawling the pages that really matter to you.

For beginner SEO learners, understanding crawl budget is like learning how a librarian chooses which books to check first. When you manage this budget well, search engines can focus on your most valuable pages, such as key articles, product pages, or important guides. This helps improve your chances of appearing in search results and keeps your content fresh and easy to find.

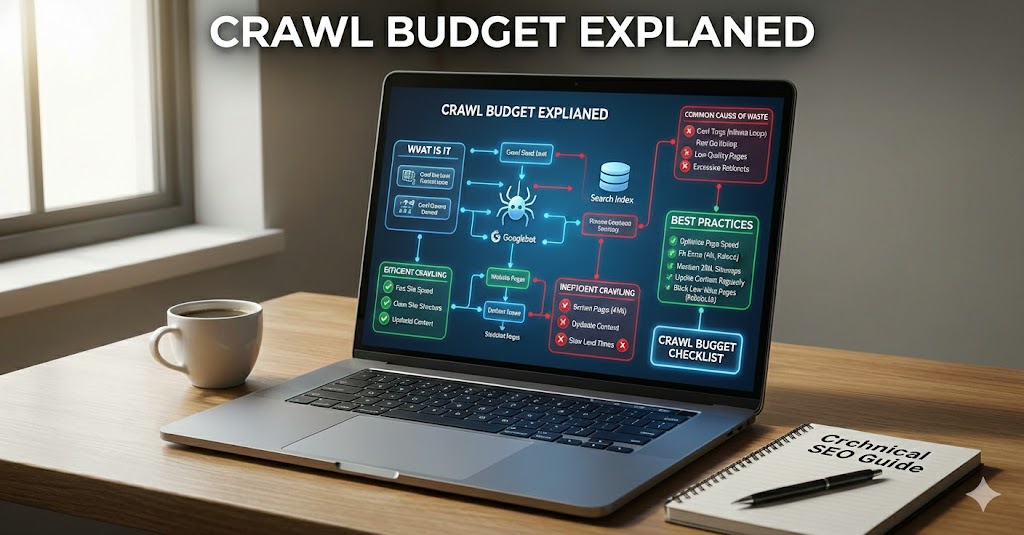

Crawl Budget Explained

Before digging into technical details, it helps to picture how crawl budget works in everyday terms. Search bots cannot visit every page of every site all the time, so they must choose carefully where to spend their limited attention.

Imagine a delivery truck with limited fuel visiting many houses in one day. It must choose which streets to drive first and which stops to skip. Search bots work in a similar way when they visit your site.

In technical terms, crawl budget is the practical limit of URLs a search engine will request from your website in a set time. It is shaped by how often the bot wants to visit you and how much load your server can safely handle without slowing or breaking.

This limited allowance is important because it decides which pages get checked and refreshed. If many visits are spent on login URLs, filters, endless calendar pages, or old test sections, fewer resources remain for your key content like guides, products, or service pages.

For beginner SEO learners, managing this budget means gently guiding bots toward useful, unique, and well-structured pages. Over time, that helps important content be discovered earlier, updated faster, and shown more reliably in search results.

Introduction to Crawl Budget

Once you understand the basic idea of crawl budget, the next step is to see how it behaves in real life. The way search engines choose what to visit on your site can explain why some pages get attention quickly while others are overlooked.

Have you ever wondered why some of your new pages appear in search results quickly, while others seem to sit unnoticed for weeks? That difference is often linked to how smartly search engines spend their limited crawling resources on your site.

In this part, we move from simple definitions to understanding how this allowance behaves in real situations. Instead of only thinking about “how many pages get visited,” you will start to see which sections attract crawling attention and which areas quietly drain it.

At a basic level, this topic is about priorities. Search bots are constantly choosing between pages that bring fresh value and pages that repeat, waste time, or lead to errors. When your structure, links, and content gently point bots toward your best work, that invisible budget shifts toward high-impact URLs instead of low-value paths and dead ends.
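
One way to see where crawling attention goes is to group the URLs a bot requested by site section. The sketch below is only an illustration: the `sample` list is a hypothetical set of crawled paths, and `section_of` and `crawl_share` are made-up helper names, not part of any SEO tool.

```python
from collections import Counter
from urllib.parse import urlsplit


def section_of(url: str) -> str:
    """Return the first path segment, e.g. '/blog' for '/blog/post-1'."""
    path = urlsplit(url).path
    first = path.strip("/").split("/")[0]
    return "/" + first if first else "/"


def crawl_share(crawled_urls):
    """Map each top-level section to its share of crawled requests."""
    counts = Counter(section_of(u) for u in crawled_urls)
    total = sum(counts.values())
    return {section: n / total for section, n in counts.most_common()}


# Hypothetical URLs requested by a bot (assumed data, for illustration only).
sample = [
    "/blog/crawl-budget",
    "/blog/seo-basics",
    "/search?q=shoes",
    "/search?q=boots",
    "/search?q=hats",
    "/products/red-shoe",
]

for section, share in crawl_share(sample).items():
    print(f"{section:<10} {share:.0%}")
```

In this made-up sample, half of the requests land on internal search pages, which is the kind of low-value path the paragraph above describes.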

What Is Crawl Budget?

After seeing how crawl budget affects real crawling behavior, it is useful to return to the core definition. Thinking of your website like a place bots must explore makes the concept even clearer.

Think of your site as a small city and search bots as inspectors with limited time. They can only visit a certain number of “addresses” before they must move on to another city.

In simple terms, crawl budget is the number of URLs a search engine is willing and able to request from your website within a specific time. It is not a public number you can see, but it behaves like a quiet limit that controls how far and how deep the bot will go.

This allowance is shaped by two main ideas: how often the bot wants to come back and how many requests your server can safely handle. When both are in balance, important pages are seen more often, while low-value sections get less attention.

For beginner SEO learners, the key is to know that crawl budget is about the pages that actually get requested, not just the pages that exist. The more you remove endless, low-value paths, the more room you create for useful content to be crawled.

Crawl Budget Explained: Why It Matters for SEO

Knowing what crawl budget is only becomes powerful when you see its impact on visibility and traffic. How quickly search engines notice your changes can influence how well your SEO efforts perform.

Imagine publishing a great new page, but search engines take weeks to notice it. That delay often happens because their limited resources are being spent in the wrong places on your site.

When this hidden allowance is used wisely, it becomes a quiet boost for your whole SEO strategy, helping important pages get attention sooner and more often.

The main value of a healthy crawl budget is that high‑priority URLs are discovered and refreshed faster. That means new product launches, updated prices, or fresh articles can appear in results sooner, while outdated or low‑value areas receive less focus.

Good management also supports cleaner indexing. By reducing waste on duplicate URLs, infinite filters, and error pages, you help search engines build a clearer picture of your site, which can support more stable rankings over time.

- Quicker visibility for new or updated pages that matter for traffic or revenue.

- More crawling capacity available for evergreen guides, key categories, and core landing pages.

- Less noise from technical clutter that might confuse how your site is understood.

Key Factors That Control Crawl Budget

Once you see why crawl budget matters, the next question is what actually influences it. Several quiet signals tell search engines how much of their time your site deserves and where to spend it.

Why do search engines visit some sites often and others only once in a while? A few quiet rules shape how much attention your pages receive.

In this part, you will see the main elements that control how many URLs get crawled and how frequently bots return. Knowing these levers helps you avoid waste and guide crawlers toward pages that truly matter.

The first influence is overall site size and structure. Large websites with many categories, filters, and archives create more URLs, which can quickly eat into the available allowance. Clear navigation, simple URL patterns, and limited “infinite” paths make it easier for bots to cover your key sections.

Another important driver is technical health. Fast page loads, stable servers, and low error rates tell crawlers they can safely request more pages without causing problems. Slow responses, timeouts, and frequent 5xx errors send the opposite signal and can reduce how many pages bots request on each visit.

- Site speed and server reliability encourage bots to crawl more.

- Content quality and uniqueness help crawlers focus on valuable URLs.

- Internal links and sitemaps highlight which pages deserve priority.
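
To check the technical health signals listed above, you can count which response codes a bot received. This is a rough sketch that assumes your server writes logs in the common "combined" format. The log lines, IP addresses, and the `bot_status_classes` helper are hypothetical, and matching on the user agent text alone is a simplification because that text can be faked.

```python
import re
from collections import Counter

# Matches the request and status fields of a combined-log-format line.
LINE_RE = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def bot_status_classes(lines, bot_token="Googlebot"):
    """Count responses served to a bot, grouped as 2xx, 3xx, 4xx, 5xx."""
    classes = Counter()
    for line in lines:
        if bot_token not in line:
            continue  # skip requests from other clients
        match = LINE_RE.search(line)
        if match:
            classes[match.group("status")[0] + "xx"] += 1
    return classes


# Hypothetical log lines (assumed data, for illustration only).
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Mar/2024:10:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5123 "-" "Googlebot/2.1"',
    '192.0.2.10 - - [10/Mar/2024:10:00:01 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Googlebot/2.1"',
    '192.0.2.10 - - [10/Mar/2024:10:00:02 +0000] "GET /products HTTP/1.1" 503 0 "-" "Googlebot/2.1"',
    '203.0.113.7 - - [10/Mar/2024:10:00:03 +0000] "GET /blog/post HTTP/1.1" 200 5123 "-" "Mozilla/5.0"',
]

for status_class, count in sorted(bot_status_classes(SAMPLE_LOG).items()):
    print(status_class, count)
```

A rising share of 4xx or 5xx responses in a report like this is a hint that bots are spending requests on errors.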

Crawl Budget vs Indexing

After exploring what affects crawl budget, it is important to separate crawling from what happens next. Being visited by a bot does not guarantee that a page will appear in search results.

Have you ever seen a page in your server logs that was visited by a bot, yet it never appears in search results? This gap between being checked and being shown is where crawl budget vs indexing becomes important.

In basic terms, crawling is discovery, while indexing is storage and selection. Bots use your crawl budget to request URLs and read their content. After that, a separate system decides whether each page is useful enough to keep in the search index. A URL can be crawled many times and still never be indexed if it is thin, duplicated, marked as noindex, or low quality.

This means more crawling does not automatically mean better rankings. The real goal is to have important, unique pages both crawled and indexed. For beginner SEO learners, the key is to guide bots toward strong pages and avoid wasting this limited allowance on pages that are unlikely to be stored or shown in results.

Crawl Budget Explained: Common Issues That Waste It

Understanding the difference between crawling and indexing makes it easier to spot where your budget disappears. Many sites lose a large share of their allowance on pages that add almost no value.

Have you ever felt like your best pages are being “ignored” while pointless ones keep getting visited? Often, this happens because hidden problems are quietly burning through your limited crawling allowance.

In this part, you will see the most common patterns that drain crawl budget on beginner sites. By spotting these early, you can stop bots from wandering in circles and guide them toward pages that truly matter.

One major cause of waste is duplicate URLs. Small changes like tracking codes, uppercase vs lowercase, or trailing slashes can create many addresses that all show the same content. Search engines may crawl each version, spending time on copies instead of fresh pages.

Closely related are messy URL parameters such as ?sort=latest, &color=red, or &page=999. When every filter, sort, or search creates a new URL, bots can fall into almost infinite combinations that add little or no value.
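
The duplicate and parameter problems above can be illustrated with a small URL clean-up sketch. It is not how any search engine works internally. The `TRACKING_PARAMS` list is an assumption you would adapt to your own site, and treating URLs with and without a trailing slash as the same page is also an assumption that only holds if your server serves identical content for both.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def normalize(url: str) -> str:
    """Return one consistent form for URLs that show the same content."""
    parts = urlsplit(url)
    # Drop tracking parameters and sort the rest so their order does not matter.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    # Hostnames are case-insensitive; the path is kept as-is apart from the
    # trailing slash, because paths can be case-sensitive on some servers.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


# Hypothetical variants of one page (example.com is a placeholder domain).
variants = [
    "https://Example.com/shoes/?utm_source=news&color=red",
    "https://example.com/shoes?color=red&utm_medium=email",
    "https://example.com/shoes?color=red",
]

print({normalize(u) for u in variants})
```

All three variants collapse to a single address, which shows how many crawlable URLs one page can quietly turn into.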

Another frequent sinkhole is thin or low‑value pages. Very short posts, empty category archives, or near‑duplicate product entries can all be crawled again and again without helping your site. Over time, this shifts attention away from deeper, higher‑quality content.

Technical mistakes also consume budget. Long chains of redirects, many 404 (Not Found) responses, and broken internal links force bots to request URLs that lead nowhere. Each failed attempt is one less chance for an important page to be discovered.
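
Redirect chains are easy to picture with a small sketch. It assumes you already have a table of redirects, for example exported from a site audit. The `redirects` mapping and the helper names `follow_redirects` and `long_chains` are hypothetical, and no real network requests are made.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Return the URLs visited from start until one no longer redirects.

    redirects maps a URL to the URL it redirects to.
    """
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:
            break  # redirect loop: stop instead of going round forever
        seen.add(target)
    return chain


def long_chains(redirects, min_hops=2):
    """Return every starting URL whose chain needs at least min_hops hops."""
    found = {}
    for start in redirects:
        chain = follow_redirects(start, redirects)
        if len(chain) - 1 >= min_hops:
            found[start] = chain
    return found


# Hypothetical redirect table (assumed data, for illustration only).
redirects = {
    "/old": "/older",
    "/older": "/new-ish",
    "/new-ish": "/new",
}

for start, chain in long_chains(redirects).items():
    print(start, "->", " -> ".join(chain[1:]))
```

Each extra hop is another request a bot has to make before it reaches real content, so pointing old URLs straight at the final page is usually the simpler fix.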

- Duplicate URLs from tracking codes or case changes.

- Uncontrolled parameters that generate endless variations.

- Thin content with little unique information.

- Broken links and errors that waste crawl attempts.

Basic Ways to Improve Crawl Budget for Beginners

Once you know what wastes crawl budget, you can start making simple improvements. Even small, non-technical changes can help search engines spend more time on the pages that matter most.

Have you ever wished that search engines would find your best pages first instead of getting lost in less useful corners of your site? By making a few simple changes, you can gently steer crawlers toward the areas that matter most. The ideas below stay beginner‑friendly and focus on steps you can apply even without deep technical skills.

A helpful starting point is to clean up obvious waste. Remove or update pages that are outdated, empty, or nearly identical to others. When there are fewer weak URLs, bots can spend more of their limited attention on strong, unique content such as main categories, detailed guides, and key product pages.

Next, improve how those important pages are connected. Simple tweaks like adding clear internal links from your homepage and main menus, or creating a tidy HTML sitemap, make it easier for crawlers to reach deep sections without wandering through endless filters or archives. You are quietly telling bots, “these URLs matter most.”
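
How well pages are connected can be estimated by counting clicks from the homepage. The sketch below uses a breadth-first search over a hypothetical `links` map (page to the pages it links to). The map and the `click_depths` helper are assumptions for illustration, not output from a real crawler.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return the fewest clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest route
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map (assumed data, for illustration only).
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/crawl-budget"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red"],
    "/orphan": [],
}

depths = click_depths(links)
for page in links:
    print(page, depths.get(page, "not reachable from the homepage"))
```

Pages that sit many clicks deep, or that are not reachable at all like `/orphan` here, are good candidates for a new internal link.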

Finally, protect your allowance by keeping bots out of low‑value areas that you do not want in search. For example, you can use robots.txt to ask crawlers to skip login pages, test sections, and endless search results. A meta robots noindex rule works differently: it keeps a page out of search results, but bots must still crawl the page to read the rule, so on its own it does not stop those requests. Combined with regular checks for broken links and long redirect chains, these basics help more of your crawl budget land where it can actually support your SEO goals.
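
Before publishing robots.txt rules, it can help to test them. This is a minimal sketch using Python's standard `urllib.robotparser` module. The rules, the `example.com` domain, and the paths are hypothetical, and this parser uses simple prefix matching, so it may not mirror every search engine's behavior exactly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules (assumed, adapt the paths to your own site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /test/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Paths to check against the rules above.
paths = ["/login", "/test/page", "/search?q=shoes", "/guides/crawl-budget"]

for path in paths:
    allowed = parser.can_fetch("*", "https://example.com" + path)
    print(f"{path:<25} {'allowed' if allowed else 'blocked'}")
```

Checking a handful of important URLs this way reduces the risk of accidentally blocking a page you do want crawled.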

Putting Crawl Budget into Practical SEO Action

Bringing all these ideas together turns crawl budget from a theory into something you can use day to day. With a clearer view of how bots move through your site, each improvement becomes more intentional.

Understanding crawl budget in simple terms turns it from a hidden technical idea into a practical tool you can manage. You now know it is about how many pages search engines choose to visit, which pages get seen first, and how this affects what finally appears in search results.

Instead of trying to control every crawl, your goal is to make smart choices that guide bots: keep your structure clear, focus on useful, unique content, and reduce duplicate, broken, or endless URLs that quietly waste this limited allowance. Over time, these small steps help more of your important pages get discovered and refreshed faster.

As you continue learning SEO, remember that crawl budget is one part of a bigger picture. Good content, solid technical health, and clear navigation all work together. Start with the basic actions you can manage today, observe how search engines react, and gradually build on that progress so crawl budget becomes a simple, everyday part of how you improve your site.