In conversations about SEO, you will often hear the phrase “duplicate content.” This simply means that the same or very similar text appears in more than one place on the web. These places can be on the same site or on different sites, and the pages can look almost the same to both users and search engines.

Duplicate content is a common SEO concern because search engines want to show users the best and most helpful result, not many copies of the same thing. When there are many matching versions of a page, search engines can get confused about which one to show, which one to rank higher, and which one to save in their index.

For beginners, it is important to know that most duplicate content is not spam. It often happens by accident, due to normal site features and settings. Still, understanding how and why it appears helps you keep your pages clear, simple, and easy for search engines to understand, so your site can perform better over time.

Duplicate Content Explained

Before you can solve duplicate content issues, it helps to see how search engines view similar pages. This section builds on the basic idea and explains what duplication looks like in practice, both within one site and across many domains.

Imagine two books on a shelf with almost the same title, cover, and story. Which one should a librarian suggest first? Search engines face a similar choice when they see many pages that look nearly alike.

In simple SEO terms, duplicate content appears when large parts of text are the same, or very close, across different pages. These can be pages on one domain (often called internal duplicates) or across many domains (often called external duplicates), and both can affect how clearly a site’s information is understood.

- Internal versions: the same article reachable at several URLs inside one site.

- External versions: one guide copied and re-published on other sites without big changes.

For beginners, the key idea is that search engines prefer a clear “main” version. When many near-clones exist, ranking power can be spread too thin, and visibility may drop even if the content itself is useful.

Introduction to Duplicate Content

Once you know what duplicate content is, the next step is understanding why it matters in real searches. This part bridges the simple definition with the behind-the-scenes choices search engines make every day.

Have you ever searched for something and opened two different pages that almost repeat the same words? This kind of overlap is at the heart of duplicate content issues, and it quietly shapes which pages rise to the top of search results.

In this part of the guide, you will see how similar blocks of text can appear for normal, everyday reasons and what that means for visibility, clicks, and traffic.

Behind the scenes, search engines compare many pages that look alike, then try to pick just one main version. They consider things like URL structure, where the text was first seen, and how other pages link to it. When these signals send mixed messages, rankings can weaken even if the content itself is helpful.

- Clear “main” pages help search engines understand your site.

- Uncontrolled duplicates can dilute authority and reduce impressions.

What Is Duplicate Content in Simple Terms?

After seeing how search engines compare similar pages, it helps to return to a plain-language view. This section restates the idea of duplication in everyday terms so the core concept is easy to remember.

Think about hearing the same short story read out loud on three different radio stations. The tale does not change, only the place where you hear it. That is very close to how duplicate content works on the web.

In everyday language, duplicate content means that large parts of text are reused on more than one page, so the pages look almost the same to search engines. The words do not need to match line by line; even very close copies can count as duplicates when they repeat the main idea and structure.

On a basic level, search systems are trying to form a clean map of the internet. When many similar pages appear, they must decide which one is the main version worth showing, and which ones are extra copies. This choice affects how a page can gain trust, links, and space in search results over time.

- Short repeated blocks like headers or menus are usually fine and expected.

- Whole paragraphs or full articles reused across pages are more likely to cause confusion.
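To make “very close copies” more concrete, here is a minimal sketch of one textbook way to score how much two texts overlap: split each text into overlapping three-word sequences (often called shingles) and compare the two sets. This is only an illustration. The article does not say how any search engine measures similarity, and the function names and the shingle size of 3 are assumptions chosen for the example.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences ("shingles") in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a: str, text_b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0  # nothing to compare
    return len(a & b) / len(a | b)


original = "Duplicate content means the same text appears on more than one page."
near_copy = "Duplicate content means the same text appears on more than one URL."
unrelated = "Our bakery opens at seven every morning and sells fresh bread."

print(jaccard_similarity(original, near_copy))  # high score: one word differs
print(jaccard_similarity(original, unrelated))  # no shared three-word runs
```

A score near 1.0 suggests two pages share most of their wording, while a score near 0.0 suggests they do not. Where to draw the line between “similar” and “duplicate” is a judgment call for your own site, not a fixed rule.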

Types of Duplicate Content Explained

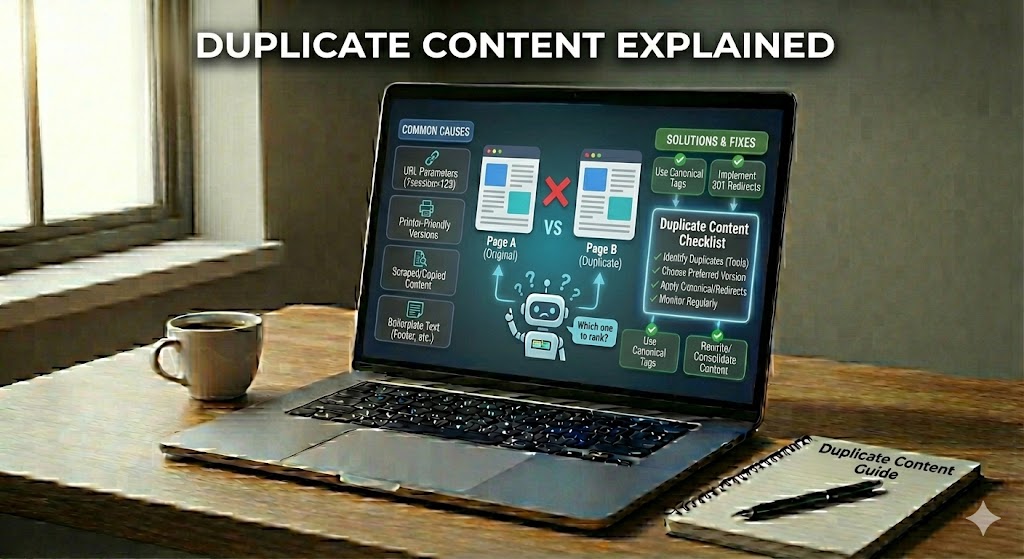

Knowing the definition is useful, but recognizing how duplicates are created is what helps you spot them on your own site. This section walks through the most frequent patterns so you can identify and manage them early, keeping your site structure easy to read for crawlers and users alike.

Have you ever noticed that the “same” page can load with slightly different web addresses? To people, it feels identical, but to search engines, each version can look like a new page, which can quietly create hidden duplicates in the background.

One frequent source is URL variations. Small changes such as extra tracking codes, session IDs, or switching between a trailing slash and no slash can all produce separate URLs that show the same content. Over time, this can spread signals across many look‑alike pages instead of one clear, strong version.
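The URL variations described above can be collapsed back to a single address with a small normalization step. The sketch below uses Python's standard `urllib.parse` module. The parameter names in `IGNORED_PARAMS` are hypothetical examples of tracking codes and session IDs, so you would swap in whatever your own site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sid"}


def normalize_url(url: str) -> str:
    """Strip tracking/session parameters, the fragment, and any trailing slash."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    # Keep a lone "/" so the home page still has a valid path.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(kept), ""))


variants = [
    "https://example.com/guide",
    "https://example.com/guide/",
    "https://example.com/guide/?utm_source=news&sessionid=abc",
]
print({normalize_url(u) for u in variants})  # all three collapse to one URL
```

Running a list of crawled URLs through a function like this is a quick way to see how many addresses really point at the same page.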

Another pattern comes from printer‑friendly pages. Many sites create a clean “print” version of an article at a different URL, but the text is almost the same as the main page, so search engines must decide which one to keep as the main copy.

E‑commerce layouts often add even more versions through product filters and parameters. Color filters, sorting options, or price sliders can all change the URL while leaving most of the text and images the same, which quickly multiplies near‑duplicate category pages.
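To see how quickly filters multiply, you can group a list of URLs by host and path while ignoring the query string. This is a rough sketch, and ignoring the whole query string is a blunt assumption made only for illustration: some parameters, such as page numbers, do change the content in a meaningful way. The `example.com` URLs and the function name are made up.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_page(urls):
    """Group URLs that share a host and path, ignoring the query string.

    Only groups with more than one URL are returned, since those are
    the candidates for near-duplicate pages.
    """
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        groups[(parts.netloc.lower(), parts.path)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


crawled = [
    "https://example.com/shoes",
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?sort=price",
    "https://example.com/hats",
]
for (host, path), found in group_by_page(crawled).items():
    print(host, path, len(found))
```

Any group with a large count is worth a closer look, because it may be one category page wearing many different URLs.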

Finally, there is copied content across domains, where the same article, product description, or guide is republished on other sites with few or no edits. This can happen with supplier feeds, guest posts, or simple copy‑paste reuse and makes it harder for search engines to choose the canonical source.

- URL tweaks that do not change the main text.

- Print views of articles sharing the same body copy.

- Filter URLs on store pages showing similar item lists.

- Reused articles spread across many domains.

Why Duplicate Content Is a Problem for SEO

Once you can spot duplicates, the natural next question is how they affect performance. This section connects those patterns to the practical SEO issues they create for rankings, crawling, and indexing.

Imagine a teacher getting three homework sheets with almost the same answer. Which one should get the best grade? Search engines face a similar puzzle when many pages look alike, and this can quietly weaken a site’s performance.

The biggest challenge is confusion for search engines. When several URLs show nearly the same text, a search engine may struggle to pick a clear main page. That lack of clarity can lead to unstable rankings and crawl time wasted on copies.

There is also the issue of split ranking signals. Links, clicks, and other signals might spread across many copies instead of building up one strong version. Over time, that can make every page a bit weaker in search results.

Lastly, repeated pages can cause indexing problems. Some useful URLs may not be stored or refreshed as often if the system thinks they add nothing new. This is why keeping one obvious, main version of each important page helps your whole site appear clearer.

Does Duplicate Content Cause Penalties?

Understanding the risks naturally leads to worries about penalties. This section clarifies what actually happens when search engines detect repeated text, so you can focus on real priorities instead of myths.

Many site owners worry that any repeated text will bring a harsh “SEO punishment.” In reality, the outcome is usually milder, and understanding it can remove a lot of fear.

Most of the time, duplicate content does not trigger a direct manual penalty. Instead, search systems usually filter or fold similar pages together so that only one version appears strongly in results. The cost is often lost visibility, not a formal punishment.

Problems start when repetition is used in a clearly manipulative way, such as creating many low-value copies purely to grab extra rankings. In those rare cases, a search engine's quality team may apply a manual action, which feels like a penalty because many pages lose traffic at once.

- Normal, accidental duplication → usually filtered, not punished.

- Heavy, spammy copying → can lead to a manual action.

- Main risk → weaker rankings because signals are spread out.

How to Fix and Prevent Duplicate Content

Knowing that duplication mostly leads to filtering, not instant penalties, sets the stage for action. This section focuses on simple, practical steps you can take to reduce confusion, keep one strong version of each page, and avoid creating new duplicates in the future.

Ever wished search engines would stop guessing which of your pages matters most? Cleaning up repeats is how you give them that clear answer and protect the work you already put into your site.

To fix existing problems, start by choosing one preferred URL for every important page. If other addresses show the same text, you can point them to the main one with 301 redirects so visitors and crawlers both end up in the right place. Where a redirect is not possible, for example when a print view or filtered page must stay reachable, adding a canonical tag tells search engines which version you want treated as the primary copy.
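As a rough sketch of both fixes, the code below keeps a small lookup table of duplicate paths and their preferred versions, and builds the canonical `<link>` element for pages that must stay live. The paths in `REDIRECTS` and the function names are hypothetical. On a real site, redirects are usually configured in the web server or CMS rather than hand-written like this.

```python
from html import escape

# Hypothetical mapping from duplicate paths to the preferred path.
REDIRECTS = {
    "/guide/print": "/guide",
    "/old-guide": "/guide",
}


def resolve(path: str) -> tuple[int, str]:
    """Return (status, path): 301 and the target for known duplicates, else 200."""
    target = REDIRECTS.get(path)
    if target is not None:
        return 301, target  # permanent redirect to the main version
    return 200, path


def canonical_link_tag(preferred_url: str) -> str:
    """Build the <link> element that belongs in the page's <head>."""
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'


print(resolve("/old-guide"))
print(canonical_link_tag("https://example.com/guide"))
```

The redirect sends everyone to one address, while the canonical tag leaves the duplicate page reachable but names the version you prefer.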

Small habits also help a lot. Use a consistent URL structure (for example, always HTTPS, one choice of WWW or non‑WWW, clear rules for trailing slashes) and avoid creating separate pages for tiny changes like sort orders or print views when a single page can do the job.
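One way to keep those rules consistent is to write them down as a single function that turns any incoming address into the preferred form. The sketch below assumes a site that chose HTTPS, no WWW, and no trailing slash. Those defaults and the function name are illustrative choices, not recommendations from this guide.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url: str, use_www: bool = False, trailing_slash: bool = False) -> str:
    """Rewrite a URL to one consistent scheme, host style, and slash rule."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    host = f"www.{bare}" if use_www else bare
    path = parts.path.rstrip("/")
    # The home page always keeps its "/" so the URL stays valid.
    if trailing_slash or not path:
        path += "/"
    # Always HTTPS; the query string is kept, the fragment is dropped.
    return urlunsplit(("https", host, path, parts.query, ""))


print(preferred_url("http://WWW.Example.com/blog/"))
print(preferred_url("http://example.com/blog", use_www=True, trailing_slash=True))
```

Whichever options you pick matter less than applying the same ones everywhere: in redirects, internal links, canonical tags, and sitemaps.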

Prevention is often easier than repair. When you publish new content, check that no older page already covers the same topic in almost the same words. If you must reuse text, such as legal disclaimers or product specs from a supplier, surround it with unique explanations, tips, or examples that add real value.

Good internal linking supports this work. Link consistently to your chosen main versions in menus, sitemaps, and body text, so search engines see them as the central hubs. Over time, this combination of clear URLs, careful reuse, and thoughtful links keeps duplicate content under control and makes your site easier to understand.

Related SEO Topics and Next Steps

Once duplicate content is under control, other SEO basics become easier to manage. Understanding how repeated text can confuse crawlers also makes it easier to see the other areas that shape how your pages appear in search.

Below are several closely linked SEO topics you can explore next. Each one adds another piece to the puzzle of how search engines read, index, and rank your pages.

- Crawl budget optimization – making sure search bots spend time on your most important pages instead of endless look‑alike URLs.

- XML sitemaps – giving crawlers a clear list of preferred pages so the canonical versions are easy to find.

- Robots meta tags and robots.txt – controlling which URLs can be crawled or indexed to reduce noise.

- Site architecture and internal linking – structuring menus and links so main pages stand out as central hubs.

- URL design and parameters – planning clean, stable addresses that avoid creating endless filter and sort variations.

- Content pruning and consolidation – merging thin, similar pages into a single, stronger resource.

- Structured data markup – adding clear, machine‑readable signals that support the chosen main version of a page.

- Page experience and core quality signals – improving speed, layout, and usefulness so the selected version deserves to rank.
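As a small taste of one item from the list, here is a minimal sketch that builds an XML sitemap containing only your preferred URLs. It uses Python's standard `xml.etree.ElementTree` module and the namespace from the public sitemaps.org protocol. The URLs and the function name are made up, and a real sitemap file would normally also start with an XML declaration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(preferred_urls) -> str:
    """Return sitemap XML listing each preferred URL exactly once."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    # A set removes repeats; sorting keeps the output stable.
    for url in sorted(set(preferred_urls)):
        loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
        loc.text = url
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    "https://example.com/guide",
    "https://example.com/",
    "https://example.com/guide",
]))
```

Listing only the main versions keeps the sitemap in agreement with your redirects and canonical tags.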

Bringing Duplicate Content Under Control

All of these ideas come together in one goal: making your site easy for search engines to interpret. This final section sums up the main takeaway so you can move forward with confidence.

Understanding duplicate content is really about giving search engines one clear story for each important page. When your site avoids many near‑copies, it is easier for crawlers to see what matters most, keep strong versions in the index, and show them to the right people.

For beginners, the main lesson is simple: most duplication is accidental rather than malicious, but it still needs managing. By spotting repeated pages, choosing a main version, and using tools like 301 redirects and canonical tags wisely, you help search systems spend less time guessing and more time rewarding your best work.

As you keep learning related topics such as crawl budget, URL design, and site architecture, you will see how they all connect back to this idea of clarity instead of clutter. Start with a few careful checks on your own pages, fix the most obvious overlaps, and build new content with uniqueness in mind. Step by step, you can keep duplicate content managed and under control.